3 Simple Steps to Build an MCP Server

- Author: Arpit Kabra

- Published On: Jun 16, 2025

- Category: MCP (Model Context Protocol)

The Future of Tool Integration with LLMs

Most of us have used AI in some form, whether for coding or for generating Ghibli-style images. We, and probably most of our family members, have used a tool like ChatGPT at least once.

It feels almost magical: work that used to take nearly a day now completes within a few minutes.

Up to this point, things were straightforward. Developers were adding AI to their projects, and this was marketed either as integrating other software into their product or as bringing several tools together in a single application powered by AI.

So why did a new concept called MCP emerge, and why did it gain so much hype within a few days?

This blog answers these questions one by one. At the end, we will build an MCP server in just 3 simple steps, so stay tuned.

First, let’s look at the problem. After that, it becomes much easier to understand what MCP is and why we need it.

The Problem

LLMs such as OpenAI’s GPT models and Google’s Gemini are very good at generating output, but the real problem appears when we integrate them into our projects.

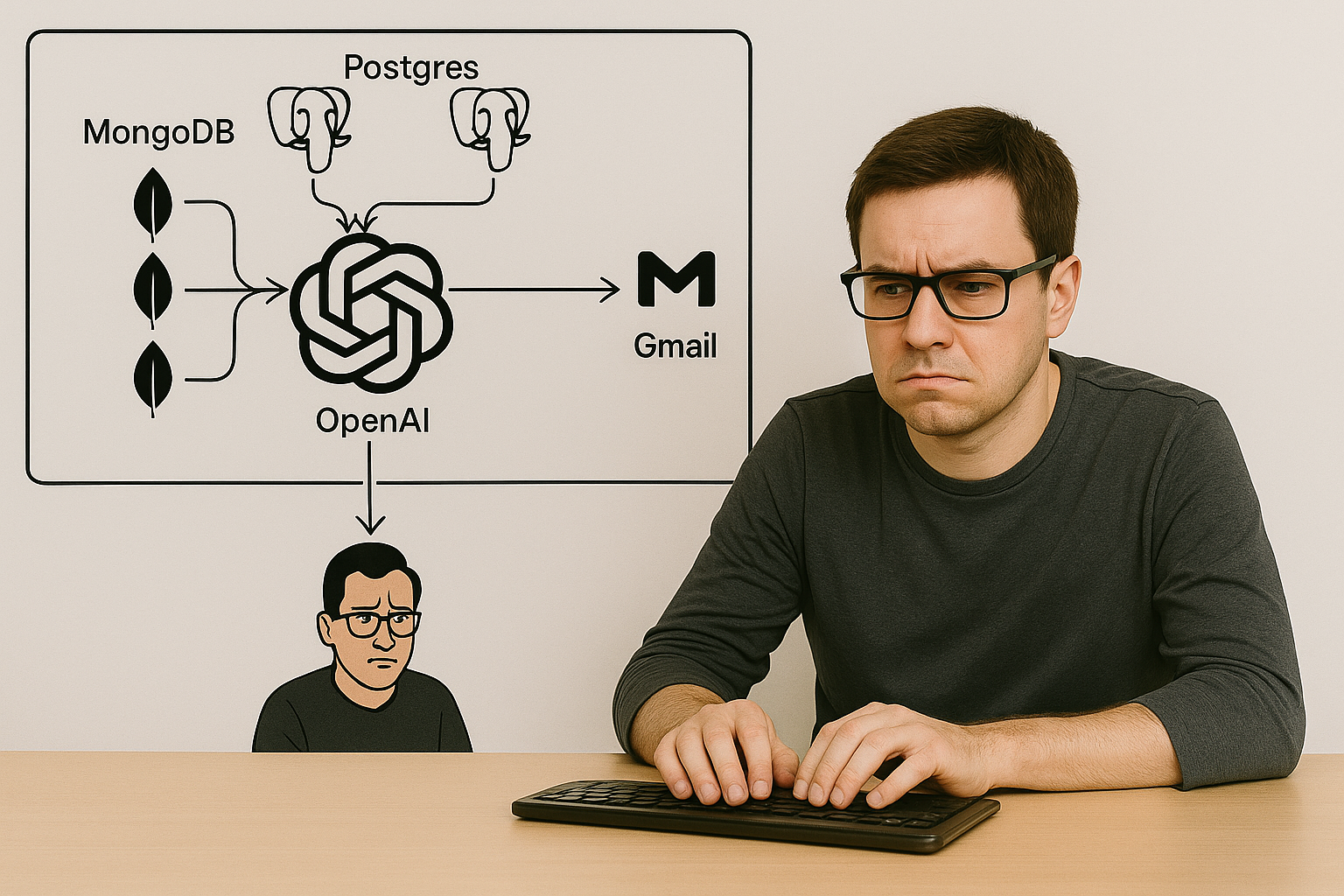

Let’s say a developer needs to integrate Postgres, MongoDB, OpenAI, and Gmail in a project. The project takes input from Postgres and MongoDB and gives it to the LLM, the LLM processes the data with Natural Language Processing (NLP), and the output is sent on to Gmail for the next step.

The developer may also need to write the flow for moving data from Postgres to MongoDB and vice versa, which is a sizeable task on its own, since the two databases are completely different.

Here the context (the data fed to the LLM) comes from Postgres and MongoDB, and feeding it to the LLM can be implemented in many ways.

Say Developer A writes a system prompt (the context) that feeds the LLM in one way.

Developer B takes a different approach to feeding the same context.

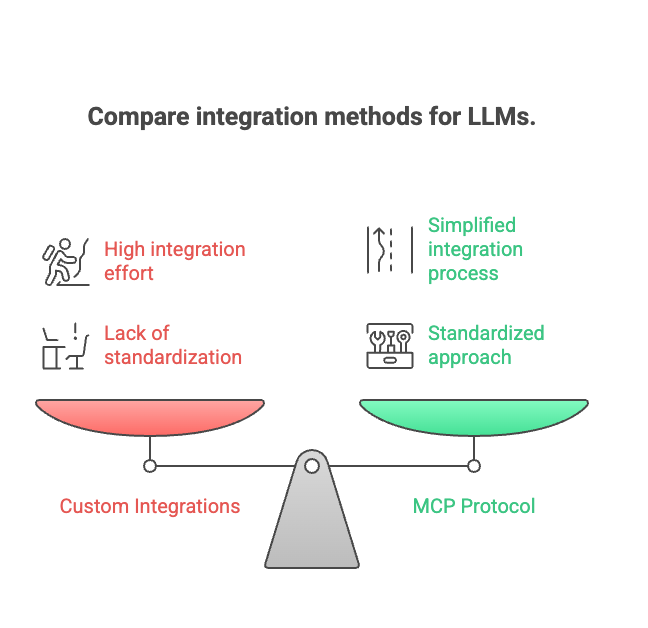

For this, each of them has to write a custom integration for every tool. Sounds good? Maybe yes.

Now say you need the same integrations in your organization. You have to write those custom integrations again, even though another developer has already solved the problem.

Don’t you feel a gap here? What if somebody had shared those tools, so that I could run a few commands and my project would have all the capabilities of those integrations?

For example, I would only need to run something like this:

    # Illustration only. You’ll see later how to add a server to your project.
    pip install postgres-mcp mongodb-mcp openai-mcp gmail-mcp

And you have all the tools in your project.

This is the problem that MCP solves for us.

Now let's understand the MCP.

What is MCP?

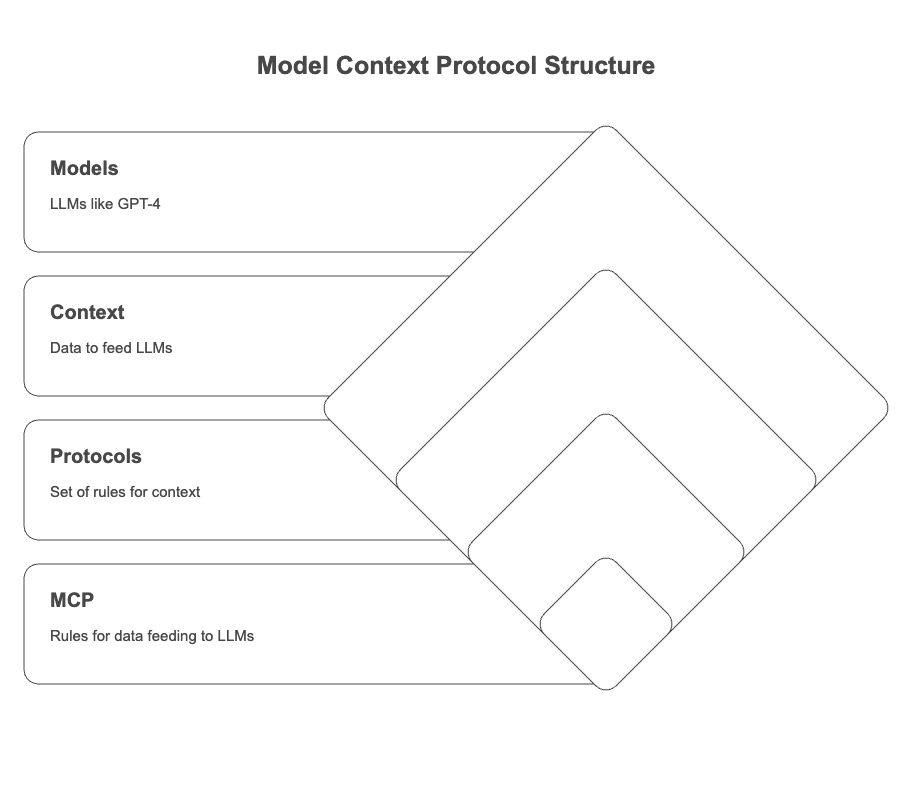

MCP stands for “Model Context Protocol”.

Breaking down the words:

- Model means the LLM, like GPT-4, DeepSeek-R1, or Gemini 2.0 Flash.

- Context means the data fed to the model.

- Protocol means a set of rules.

So MCP is a set of rules for feeding data to LLMs, or in other words, a Protocol for feeding Context to Models.

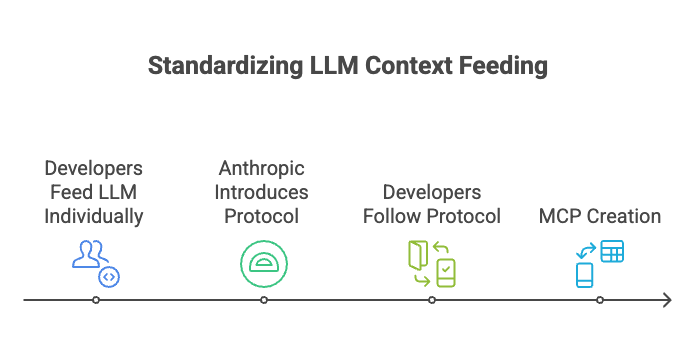

Before this, every developer had their own way of feeding the LLM. Then Anthropic published a protocol that says: if you want to feed context to an LLM, follow these steps. (The steps are covered in a later section, where we build the MCP server.)

This standardized how context is fed to an LLM. Any developer or company that follows those steps ends up with an MCP server that others can reuse.

MCP Architecture

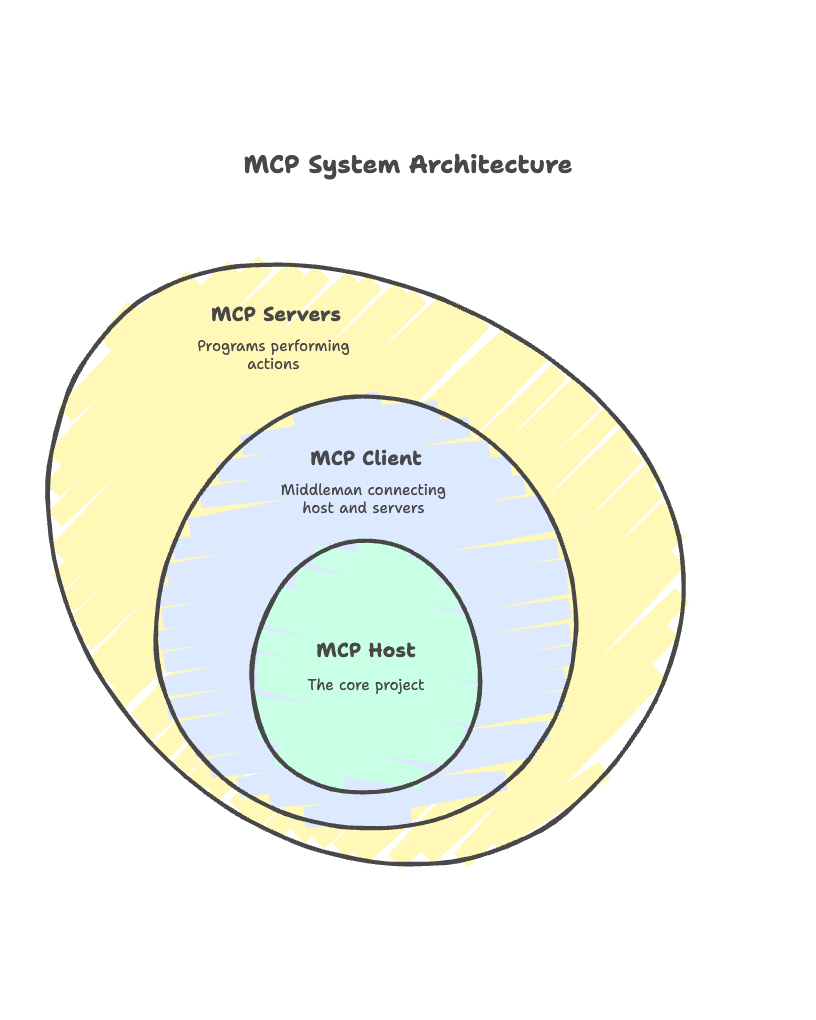

The architecture has three parts:

- MCP Host: The application the user works in and that wants to use external tools, such as Claude Desktop, Cursor, or your own project.

- MCP Client: The middleman inside the host that connects it to an MCP server. Each client maintains a connection to one server.

- MCP Servers: The servers/programs that perform some action and return the result to the MCP client.

Let’s understand the architecture by taking an example.

Say I have created a project in which the user is notified of the weather at a chosen time.

To use the MCP servers, the user enters a prompt like, “At 10 AM every day, kindly notify me with the weather in Fahrenheit.”

With the help of the LLM, the client works out that “the user is asking for the temperature in Fahrenheit at 10 AM every day.”

The client will then call, in order:

- MCP Server A to find the current temperature, then

- MCP Server B to convert that temperature from Celsius to Fahrenheit, then

- MCP Server C to notify the user with that data at 10 AM every day.
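The flow above can be sketched in plain Python. This is only an illustration: each "server" is an ordinary local function, the names `get_temperature_celsius`, `celsius_to_fahrenheit`, `notify_user` and `run_weather_flow` are hypothetical, and the temperatures are made-up sample data standing in for a real weather API.

```python
# Toy stand-ins for three MCP servers. In a real setup each would be a
# separate program that the client reaches over an MCP transport.


def get_temperature_celsius(city: str) -> float:
    """Server A (hypothetical): look up the current temperature."""
    sample_readings = {"Delhi": 35.0, "London": 15.0}  # made-up sample data
    return sample_readings[city]


def celsius_to_fahrenheit(celsius: float) -> float:
    """Server B (hypothetical): convert Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32


def notify_user(message: str) -> str:
    """Server C (hypothetical): deliver the message; here it only formats it."""
    return f"[10:00 AM] {message}"


def run_weather_flow(city: str) -> str:
    """Chain the three calls in the order the client would make them."""
    celsius = get_temperature_celsius(city)
    fahrenheit = celsius_to_fahrenheit(celsius)
    return notify_user(f"Weather in {city}: {fahrenheit:.1f} F")


print(run_weather_flow("Delhi"))
```

The point of the sketch is the ordering: the output of one tool becomes the input of the next, and the client is the piece that passes it along.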

By now you should have an idea of MCP, its architecture, and its components.

Next, let’s understand the protocol.

The Protocol - How Tools Communicate

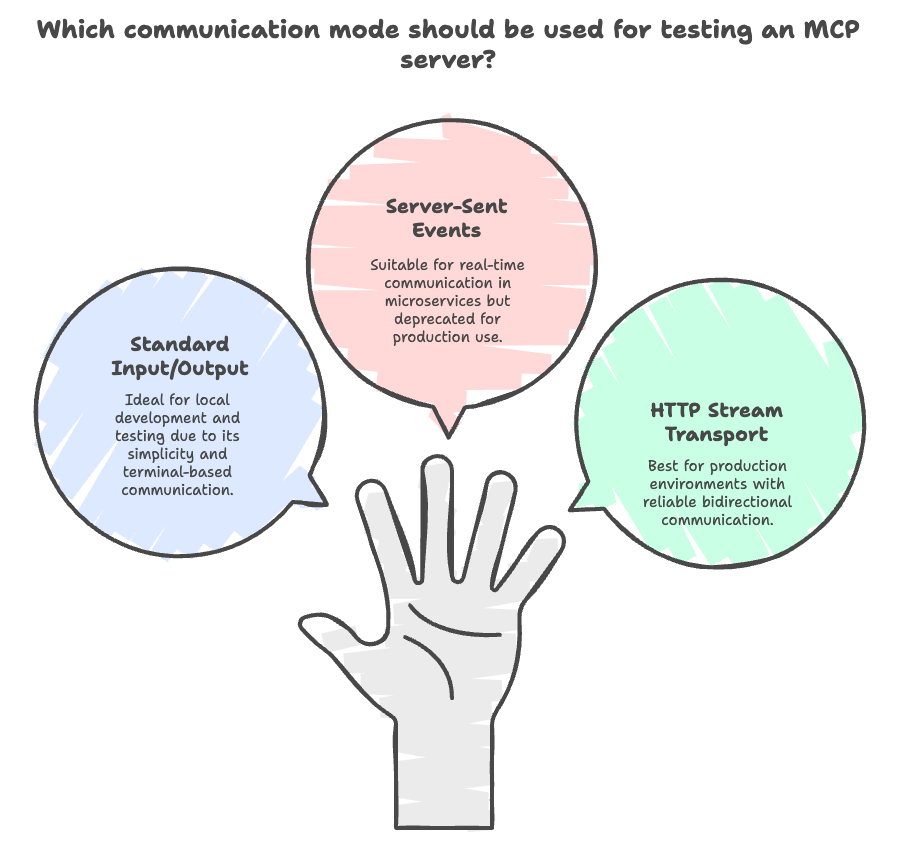

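When a developer creates an MCP server and wants to test it, how can they do that? The client must be connected to the server through some channel, right?

MCP supports the following transports for communication between client and server. The specification currently defines stdio and HTTP Stream Transport (officially named Streamable HTTP) as its standard transports; the older SSE transport is deprecated.

Those are: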

- Standard Input/Output (stdio)

  - Good for local dev/test

  - Tools run in terminal

  - Client communicates via stdin/stdout

- Server-Sent Events (SSE) (Deprecated)

  - Built for hosted/public tools

  - Enables real-time communication

  - Previously the usual choice for microservices and production use; new servers should prefer HTTP Stream Transport

- HTTP Stream Transport

  - Modern replacement for SSE

  - Supports bidirectional communication

  - Better for production environments

  - More reliable than SSE for web-based integrations
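Whichever transport is used, the messages themselves are JSON-RPC 2.0, and on stdio each message travels as one line of JSON terminated by a newline. The sketch below builds a `tools/call` request for a tool named `add_numbers` (the calculator tool built later in this post). The helper names `build_tool_call` and `encode_for_stdio` are hypothetical and exist only for this illustration; a real client library does this for you.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def encode_for_stdio(message: dict) -> bytes:
    """Serialize a message as a single newline-terminated line of UTF-8 JSON."""
    return (json.dumps(message) + "\n").encode("utf-8")


request = build_tool_call(1, "add_numbers", {"a": 2, "b": 3})
print(encode_for_stdio(request).decode("utf-8"), end="")
```

Because the payload is the same for every transport, switching from stdio to HTTP changes how these bytes are delivered, not what they contain.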

In the next section we will build an MCP server and use it with all three transports discussed above.

Building an MCP Server

Here we create an MCP server, written in Python, that adds or multiplies two numbers and returns the result.

Follow the steps below to create it.

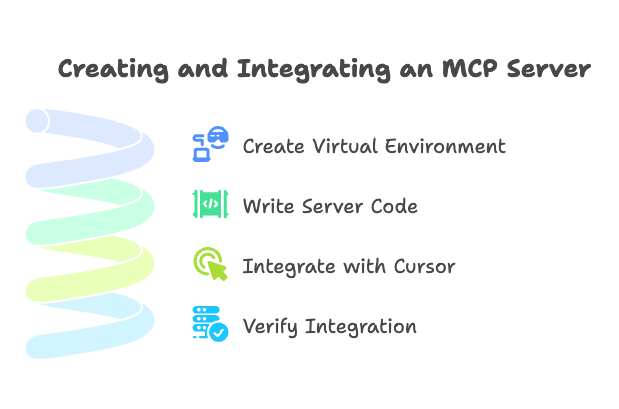

Step 1: Create the virtual environment and install all dependencies

First create a virtual environment and activate it using:

    python -m venv mcp-env
    source mcp-env/bin/activate  # For Windows: mcp-env\Scripts\activate

Install the mcp using:

pip install "mcp[cli]"

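To record them all in requirements.txt, run the first command below. Anyone setting up the project later can then install the same dependencies with the second one.

    pip freeze > requirements.txt
    pip install -r requirements.txt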

Make sure you have installed all the dependencies.
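One way to check this from Python is to ask the standard library for the installed version of the `mcp` package. This is a small sketch; the helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


mcp_version = installed_version("mcp")
if mcp_version is None:
    print('mcp is not installed; run: pip install "mcp[cli]"')
else:
    print(f"mcp {mcp_version} is installed")
```

Run it with the virtual environment activated, otherwise it reports on whatever Python installation happens to be first on your PATH.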

Step 2: Create a file and write the code (stdio)

Now create a file named calc-server.py in the same directory:

    # calc-server.py
    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("Calculation")

    @mcp.tool()
    def add_numbers(a: int, b: int) -> int:
        """Add two numbers and returns the sum"""
        return a + b

    @mcp.tool()
    def multiply_numbers(a: int, b: int) -> int:
        """Multiply two numbers together and returns the product of both"""
        return a * b

    if __name__ == '__main__':
        mcp.run()

Below is the flow of the code:

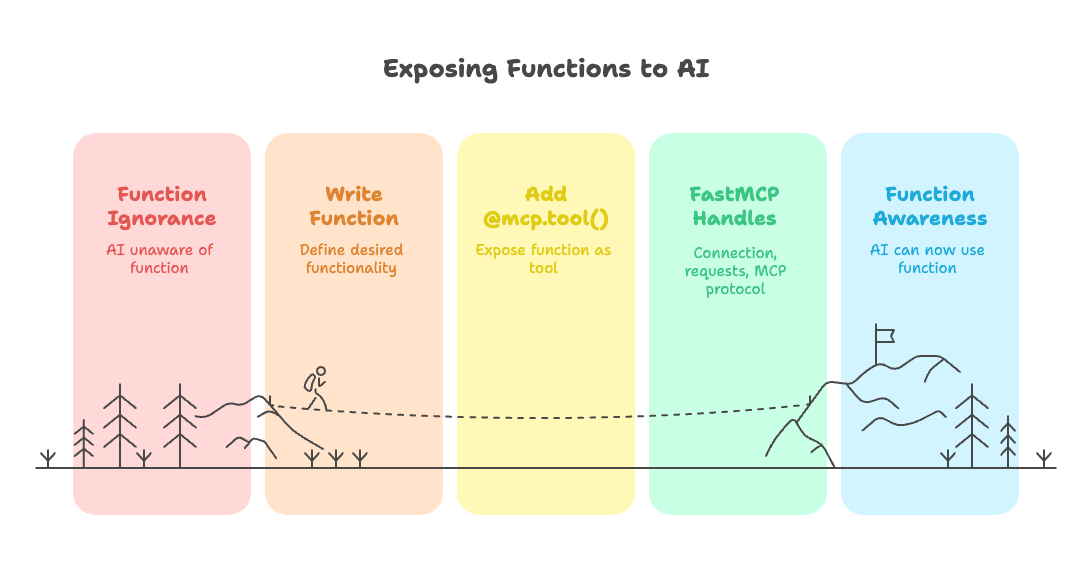

- First, we import FastMCP, a toolkit that makes it easy to build MCP servers. Think of it as the foundation.

- Next, we create our server and give it a name, in this case "Calculation". This tells everyone, including AI models, what kind of tasks this server handles.

- Then we write the normal Python functions we want to expose. Here I wrote simple addition and multiplication functions.

- Now we put the @mcp.tool() decorator on top of each function. It tells the AI, “Hey, you can use me.”

- Finally, we start everything with mcp.run(), which runs our server. With no arguments, it uses the stdio transport.

Why this matters:

The @mcp.tool() decorator is what registers a function as a tool. Without it, the AI won’t know the function exists. With it, the function’s name, parameters, and docstring are advertised to the AI as a tool it can call.

FastMCP does all the heavy lifting behind the scenes - handling connections, managing requests, and making sure everything follows the MCP protocol properly.

Simple workflow:

Write function → Add @mcp.tool() → AI can use it

That's it! The MCP server exposes your Python functions as external tools for AI models to use.
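To make the "write function, add decorator, AI can use it" idea concrete, here is a toy registry that mimics the concept. It is not FastMCP's implementation: `TOOLS`, `tool` and `call_tool` are hypothetical names, and a real server also generates a JSON schema, validates arguments, and speaks the protocol.

```python
import inspect
from typing import Any, Callable, Dict

TOOLS: Dict[str, Dict[str, Any]] = {}  # hypothetical registry, not FastMCP's


def tool(func: Callable) -> Callable:
    """Record the function's name, docstring and parameters, then return it unchanged."""
    signature = inspect.signature(func)
    TOOLS[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "parameters": {
            name: param.annotation.__name__
            for name, param in signature.parameters.items()
        },
        "handler": func,
    }
    return func


@tool
def add_numbers(a: int, b: int) -> int:
    """Add two numbers and return the sum."""
    return a + b


def call_tool(name: str, arguments: Dict[str, Any]) -> Any:
    """Look a tool up by name and call it, as a server would on a request."""
    return TOOLS[name]["handler"](**arguments)


print(TOOLS["add_numbers"]["parameters"])
print(call_tool("add_numbers", {"a": 2, "b": 3}))
```

The description and parameter list are what the model gets to see, which is why clear docstrings and type hints on your tool functions matter.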

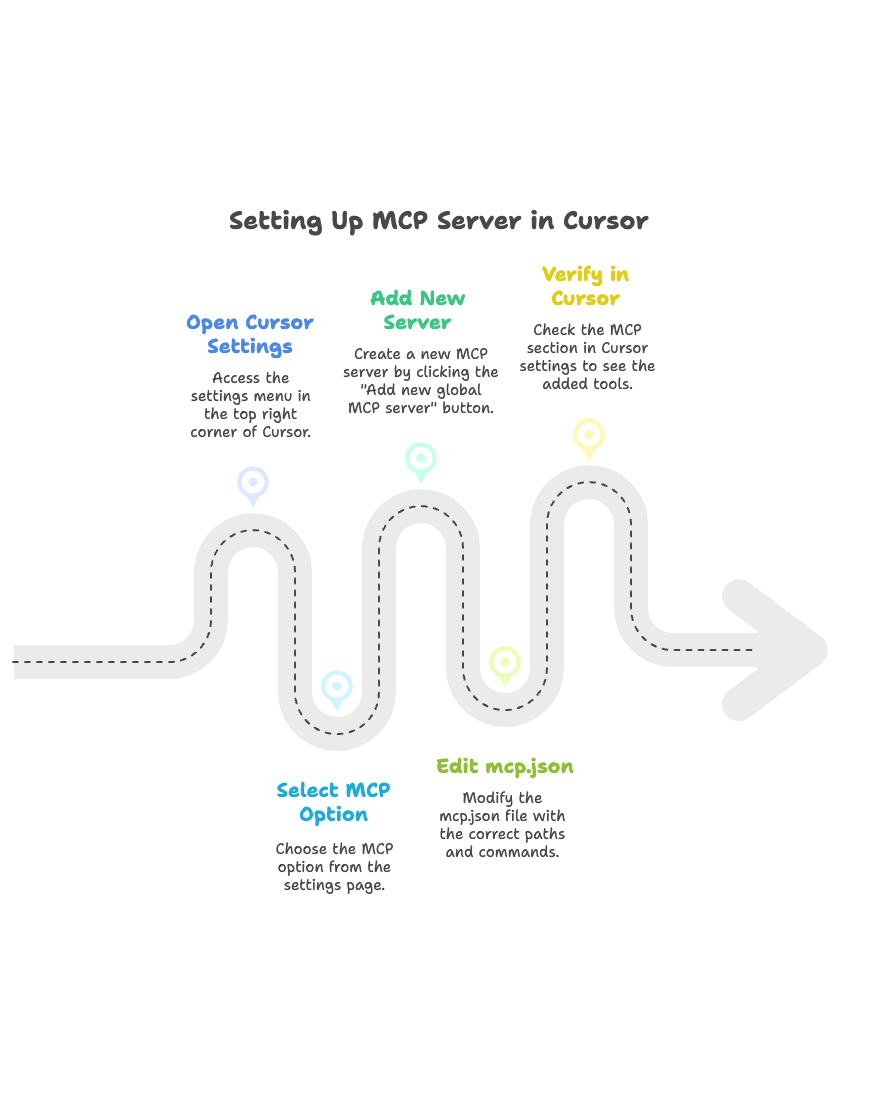

Step 3: Integrate the server in Cursor

Once all of that is done, it’s time to integrate the server with Cursor.

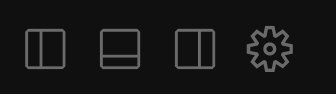

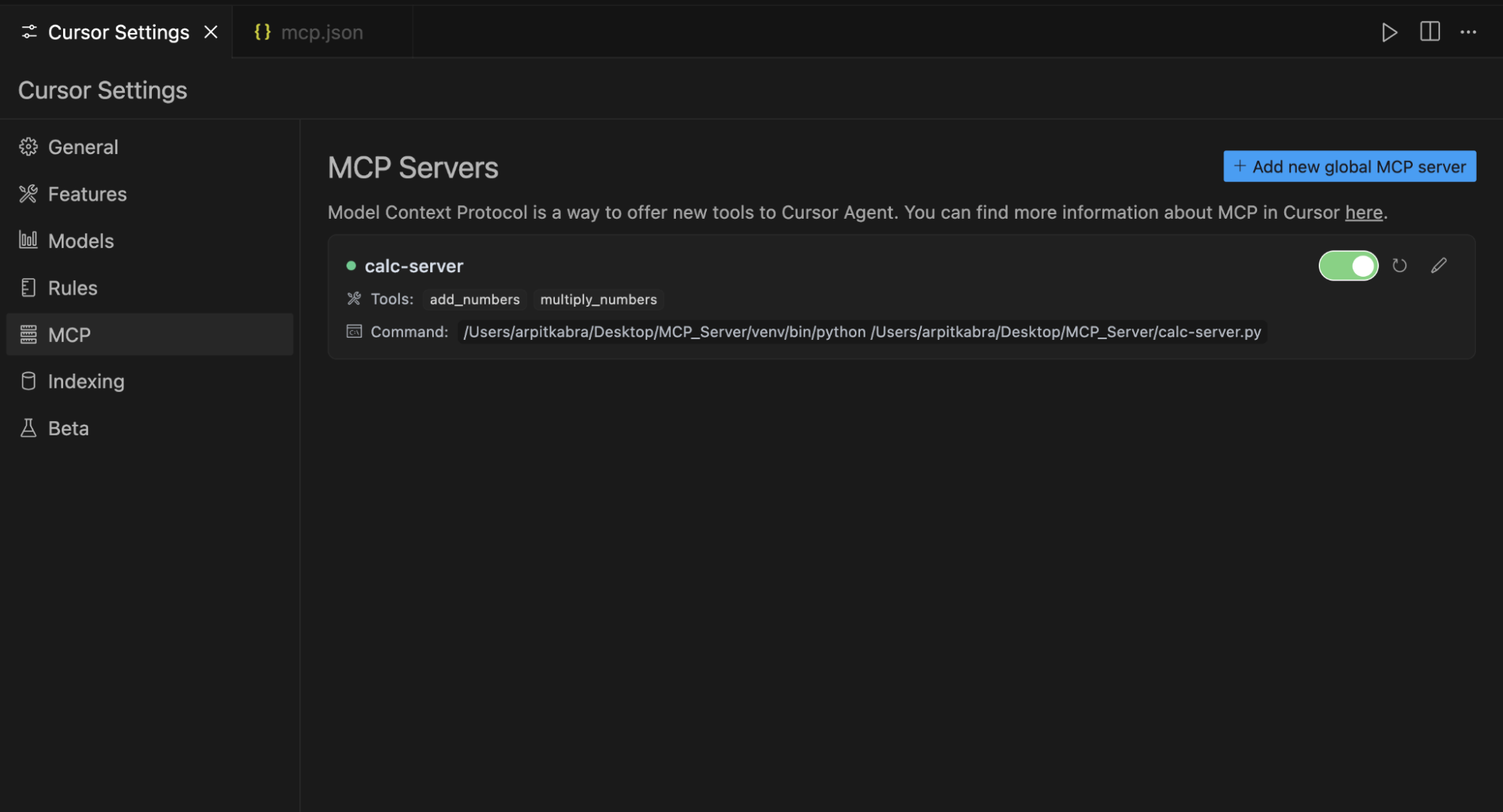

Go to the Cursor settings.

You’ll find them behind the gear icon in the top-right corner.

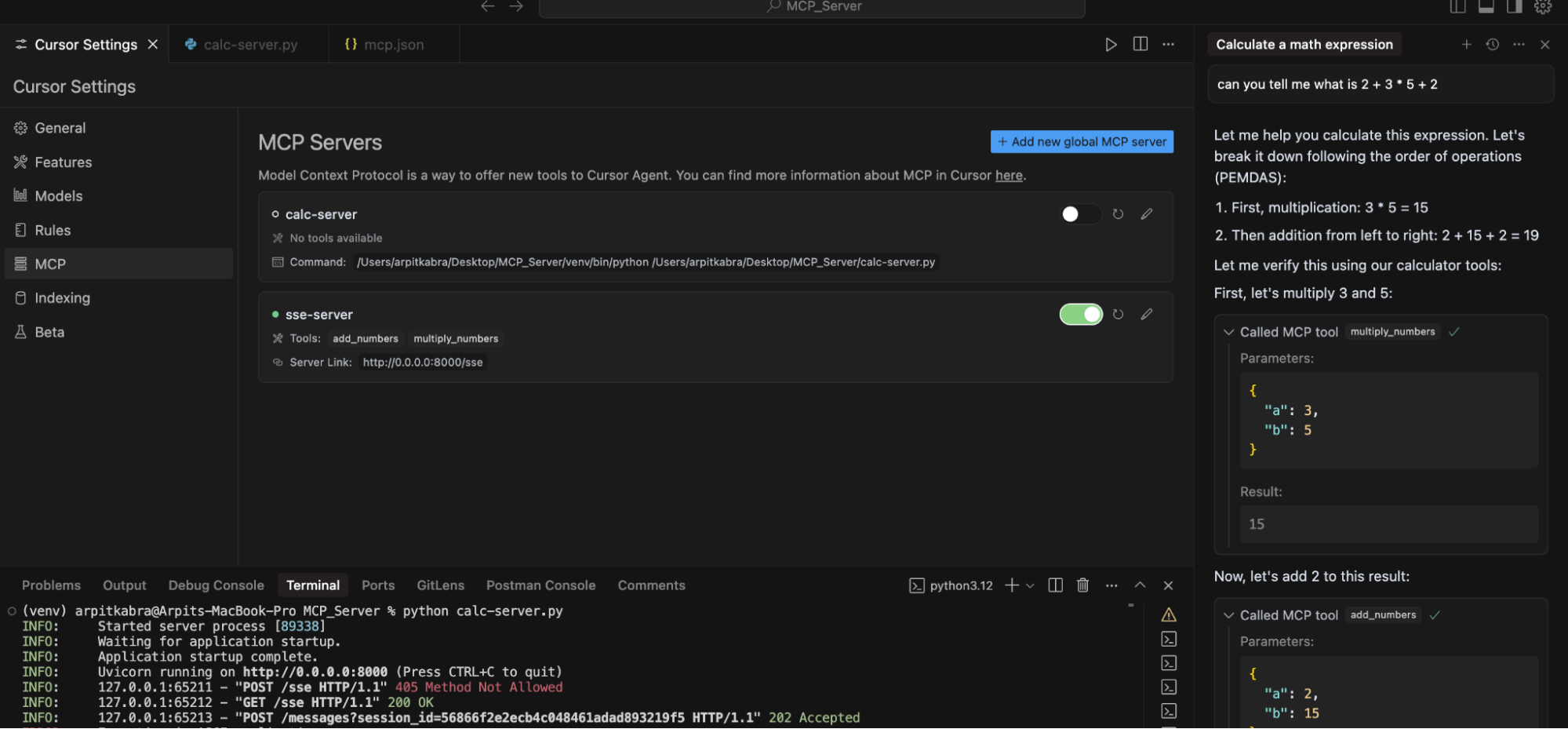

After clicking it, you’ll land on the settings page. Select the MCP option there, and you’ll see an interface like this.

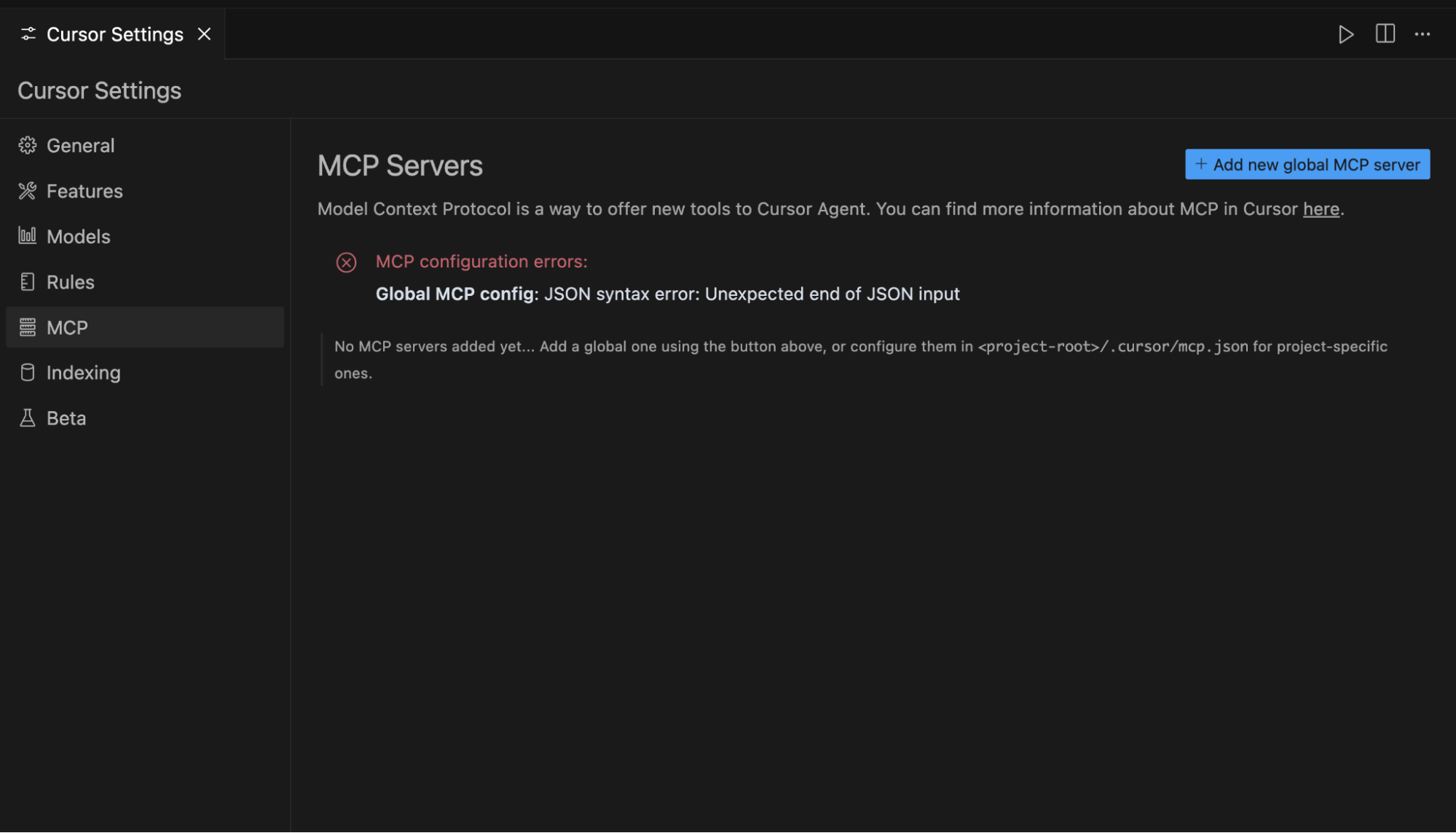

Now click on “Add new global MCP server”. A file named mcp.json will be created. Place the following in it:

    {
      "mcpServers": {
        "calc-server": {
          "command": "path-to-python/python",
          "args": [
            "path-to-directory/calc-server.py"
          ],
          "description": "A simple MCP server to add and multiply the numbers"
        }
      }
    }

As the image below shows, make sure the “command” field holds the full path to the interpreter and not just python, since the bare name might not work.

To get that path, run “which python” in the terminal (with the virtual environment activated) and paste the result into the command field.

For args, run “pwd” to get the present working directory, then append /calc-server.py to it. Note that JSON does not allow comments, so keep the file free of them.

So it’s like running python calc-server.py, but with full paths. This is the stdio transport: the client launches the script as a subprocess and exchanges messages with it over its standard input and output.
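If you prefer not to copy paths by hand, a small script can print the entry for you. This is a sketch under a few assumptions: it is run with the virtual environment activated (so that `sys.executable` is the interpreter you want), it is run from the directory containing calc-server.py, and the helper name `build_stdio_entry` is hypothetical.

```python
import json
import sys
from pathlib import Path


def build_stdio_entry(script: Path, python: str = sys.executable) -> dict:
    """Build one mcp.json entry that launches a server script over stdio."""
    return {
        "command": python,                # absolute interpreter path
        "args": [str(script.resolve())],  # absolute script path
    }


config = {"mcpServers": {"calc-server": build_stdio_entry(Path("calc-server.py"))}}
print(json.dumps(config, indent=2))
```

Paste the printed JSON into mcp.json. Generating it with `json.dumps` also guarantees the result is valid JSON, with no stray comments or trailing commas.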

Once all these steps are done correctly, close mcp.json and go to the MCP section in the Cursor settings, where you’ll see the server listed as in the screenshots below.

Under Tools, you can see add_numbers and multiply_numbers, and below them the command we provided.

If you can see that, then congratulations, you’ve made an MCP server.

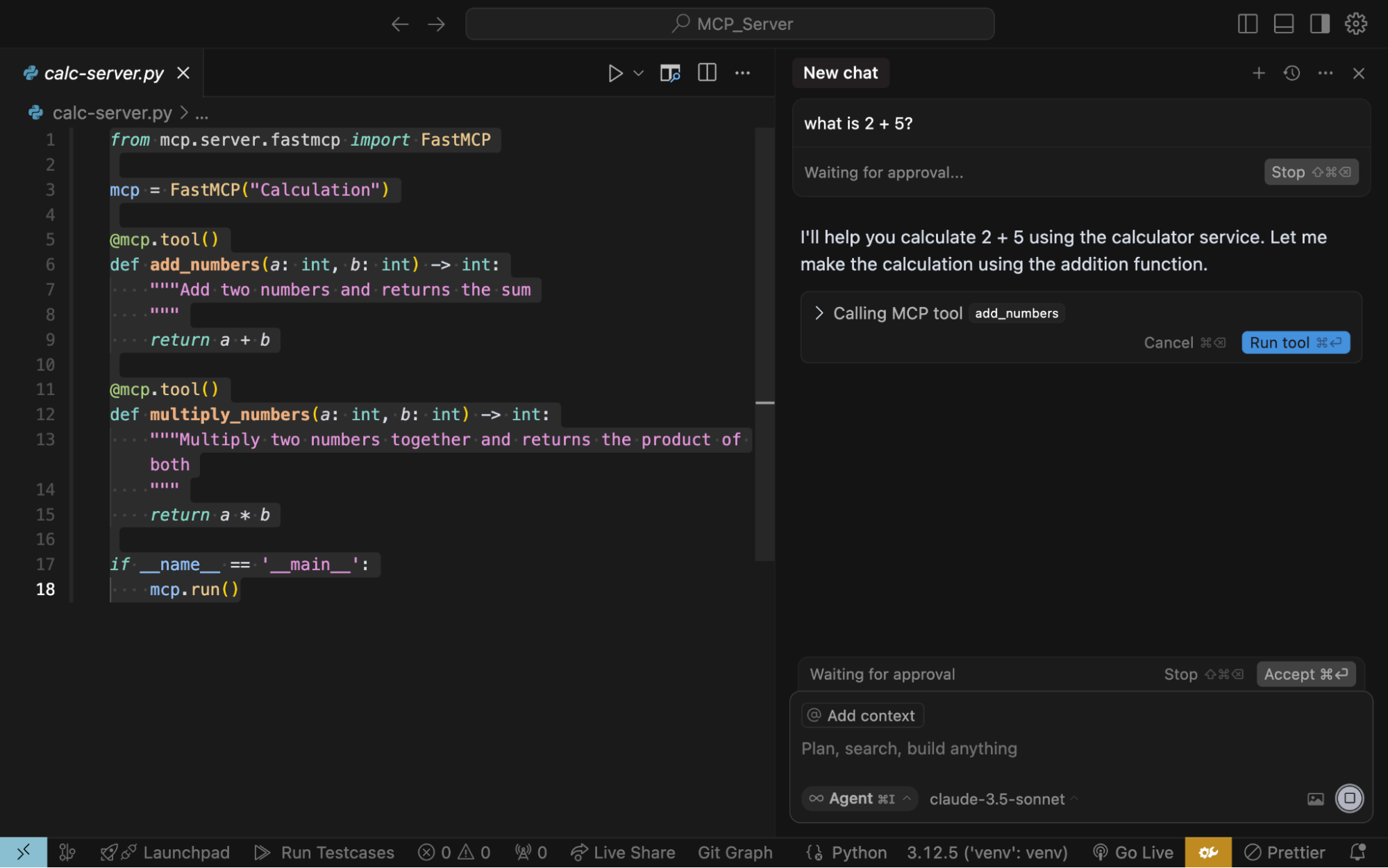

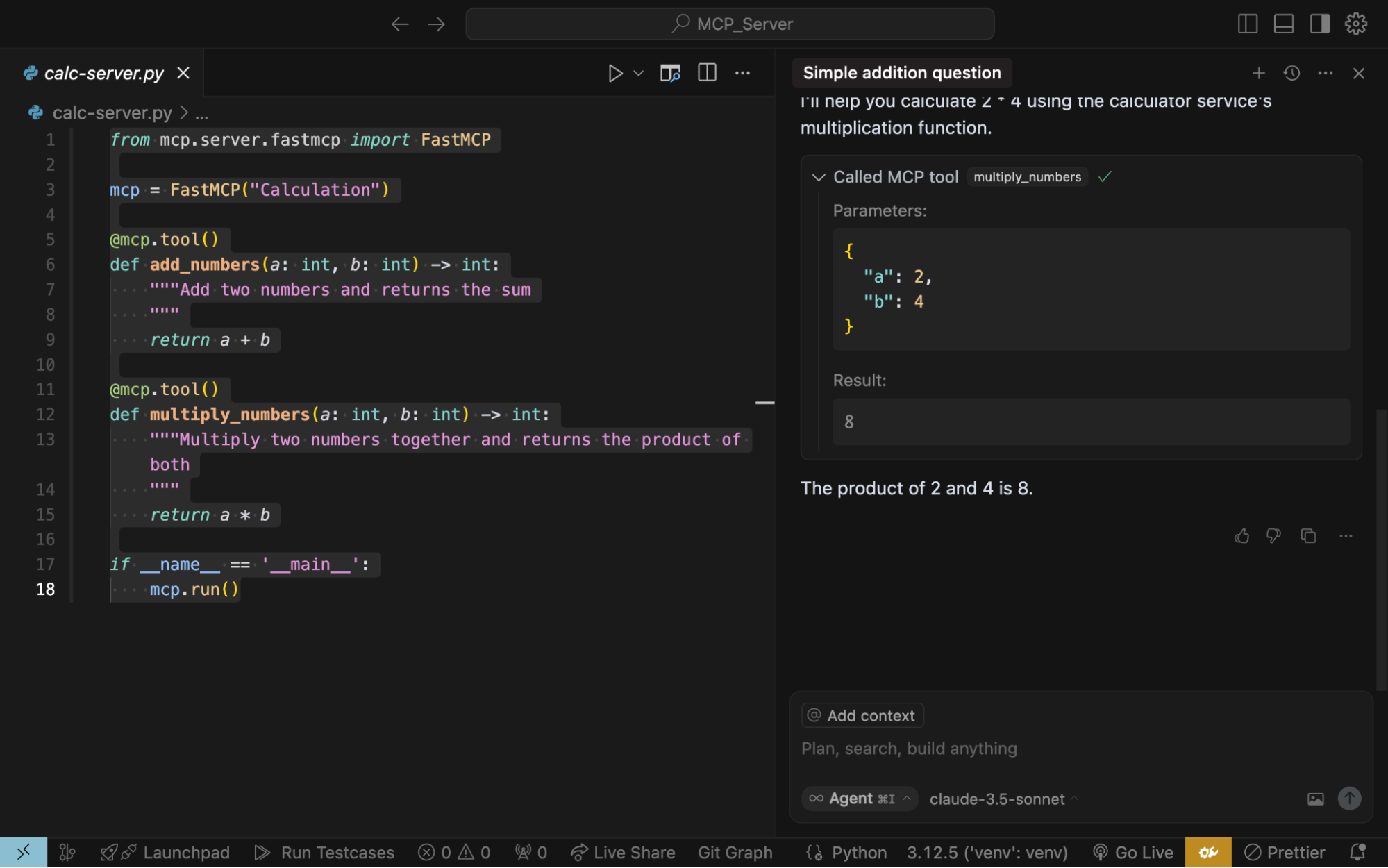

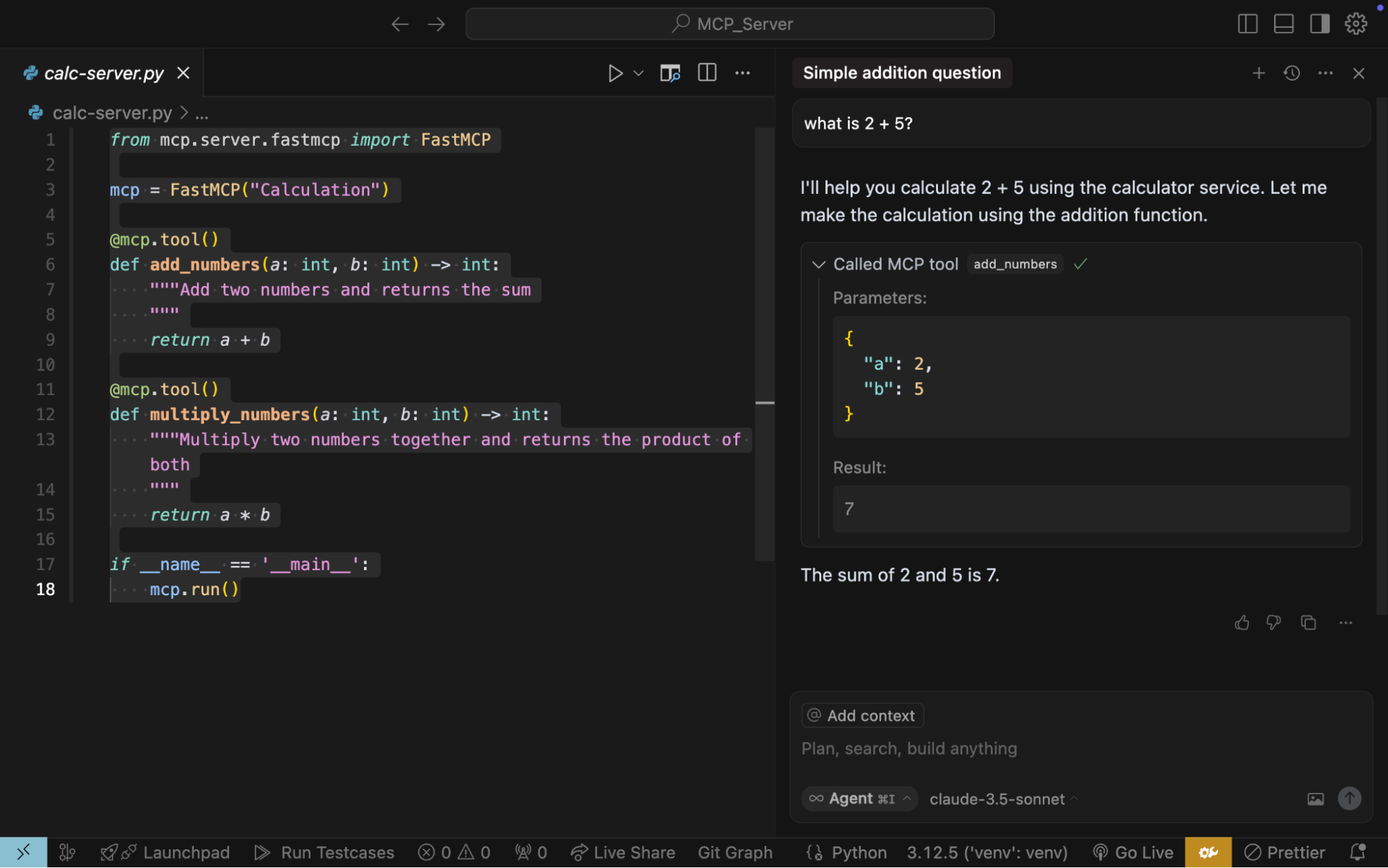

Now let’s test it.

Great🥳, you can see that the LLM called our MCP tool with the parameters and gave us the output.

Now the question arises: does the LLM handle all of this on its own? Yes. It decides which tool to call and with which arguments, and the client carries out the call.

You don’t need to do anything for that.
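The server can also be exercised without an IDE, using the client classes that ship with the same `mcp` Python package. The sketch below assumes calc-server.py is in the current directory and the package is installed; `server_launch_command` is a hypothetical helper, and the exact shape of the printed result may differ between SDK versions.

```python
import asyncio
import sys
from pathlib import Path


def server_launch_command(script: str = "calc-server.py") -> tuple:
    """Return the interpreter and argument list used to start the server."""
    return sys.executable, [str(Path(script).resolve())]


async def main() -> None:
    # Imported here so the helper above works even without the SDK installed.
    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    command, args = server_launch_command()
    params = StdioServerParameters(command=command, args=args)

    # Start the server as a subprocess and talk to it over stdin/stdout.
    async with stdio_client(params) as (read_stream, write_stream):
        async with ClientSession(read_stream, write_stream) as session:
            await session.initialize()

            listed = await session.list_tools()
            print("Tools:", [tool.name for tool in listed.tools])

            result = await session.call_tool("add_numbers", {"a": 2, "b": 3})
            print("Result:", result.content)


if __name__ == "__main__":
    if Path("calc-server.py").exists():
        asyncio.run(main())
    else:
        print("calc-server.py not found in the current directory")
```

This performs the same steps Cursor does behind the scenes: launch the script, initialize the session, list the tools, and call one of them.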

Creating an MCP Server Using Server-Sent Events (SSE)

    # calc-server.py
    from mcp.server.fastmcp import FastMCP
    from mcp.server.sse import SseServerTransport
    from starlette.applications import Starlette
    from starlette.requests import Request
    from starlette.responses import JSONResponse, Response
    from starlette.routing import Mount, Route

    # Initialize FastMCP server for Calculator tools with SSE
    mcp = FastMCP("calculator")
    sse = SseServerTransport("/messages/")

    @mcp.tool()
    def add_numbers(a: int, b: int) -> int:
        """Add two numbers and returns the sum"""
        return a + b

    @mcp.tool()
    def multiply_numbers(a: int, b: int) -> int:
        """Multiply two numbers together and returns the product of both"""
        return a * b

    async def handle_sse(request: Request):
        try:
            async with sse.connect_sse(
                request.scope,
                request.receive,
                request._send,
            ) as (read_stream, write_stream):
                # Create initialization options once per connection.
                # Note: _mcp_server is a private attribute of FastMCP.
                init_options = mcp._mcp_server.create_initialization_options()
                await mcp._mcp_server.run(read_stream, write_stream, init_options)
        except Exception as e:
            return JSONResponse({"error": str(e)}, status_code=503)
        # Return an empty response once the client disconnects
        return Response()

    # handle_post_message is an ASGI app, so it is mounted instead of wrapped in a Route
    routes = [
        Route("/sse", endpoint=handle_sse),
        Mount("/messages/", app=sse.handle_post_message),
    ]

    # Create the application
    app = Starlette(routes=routes)

    if __name__ == "__main__":
        import uvicorn

        uvicorn.run(app, host="0.0.0.0", port=8000)

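In mcp.json, we need to give the URL where the server is hosted. The server binds to 0.0.0.0 (all interfaces), but the client should connect to a concrete address such as localhost:

    {
      "mcpServers": {
        "sse-server": {
          "url": "http://localhost:8000/sse"
        }
      }
    }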

The screenshots below show the tool being called over SSE.

When using SSE, make sure to start the server yourself with python calc-server.py before connecting.

SSE has now been replaced by the HTTP Stream Transport (officially called Streamable HTTP).

HTTP Stream Transport and Server-Sent Events (SSE) are both used for streaming data from a server to a client, but they differ in their approach and capabilities. HTTP Stream Transport offers a more flexible and modern transport layer that supports both batch responses and streaming via SSE, while SSE is a specific protocol for one-way server-to-client streaming.
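To see what "one-way server-to-client streaming" looks like on the wire, here is a simplified parser for the SSE text format, in which each event is a group of `field: value` lines ended by a blank line. This is only a sketch: `parse_sse` is a hypothetical helper, the sample payloads are made up, and it skips parts of the format such as the `id` and `retry` handling that a real client implements.

```python
from typing import Dict, Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per SSE event from an iterable of text lines."""
    event: Dict[str, str] = {}
    for raw in lines:
        line = raw.rstrip("\n")
        if line == "":
            # A blank line marks the end of the current event.
            if event:
                yield event
                event = {}
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive line
        field, _, value = line.partition(":")
        value = value[1:] if value.startswith(" ") else value
        if field == "data" and "data" in event:
            event["data"] += "\n" + value  # multi-line data is joined with \n
        else:
            event[field] = value


sample_stream = [
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}\n',
    "\n",
    ": keep-alive\n",
    "event: ping\n",
    "data: hello\n",
    "data: world\n",
    "\n",
]

for parsed in parse_sse(sample_stream):
    print(parsed)
```

Nothing in this format lets the client send data back on the same stream, which is why the older SSE transport needed a separate POST endpoint for client messages.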

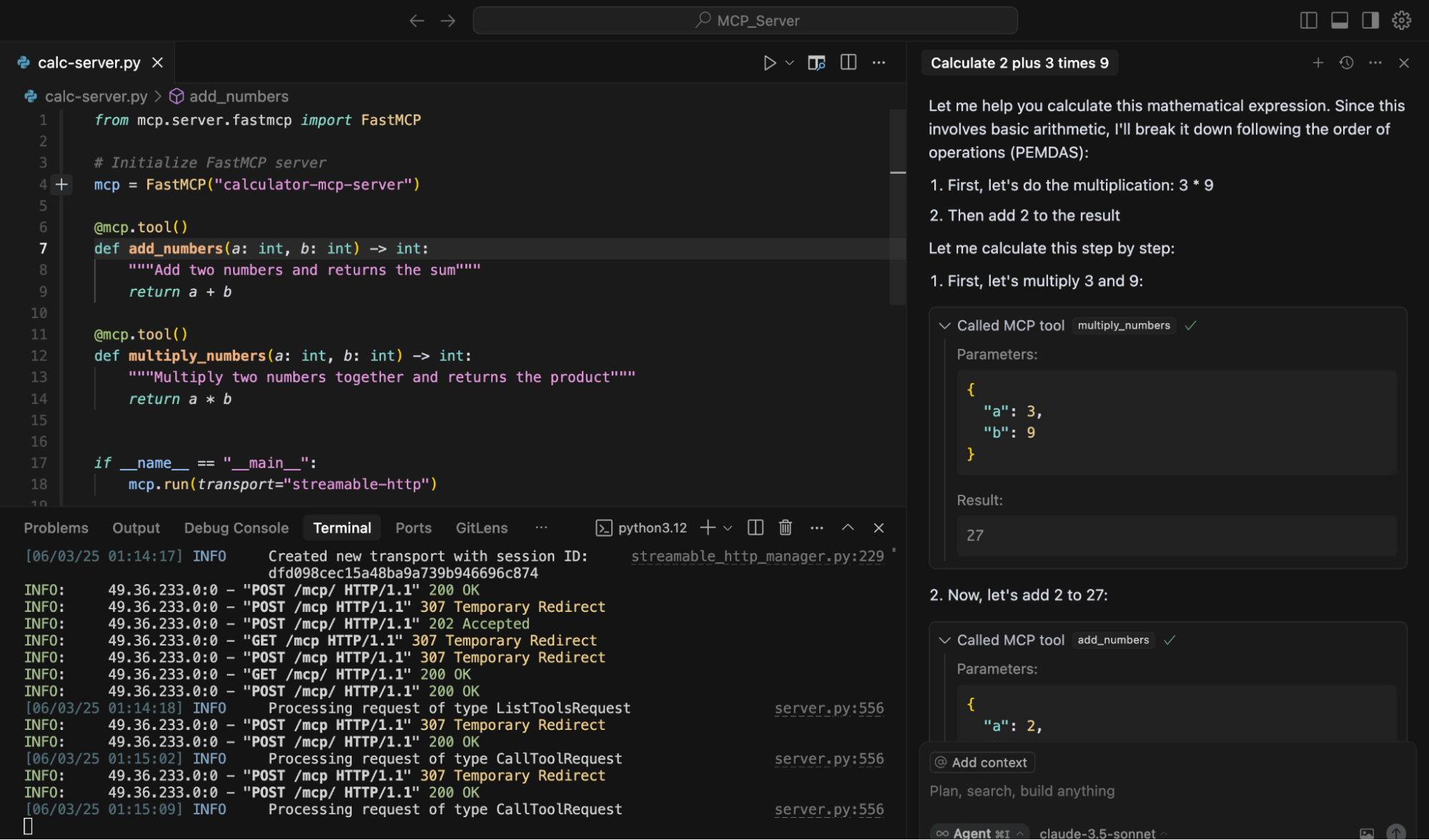

Creating an MCP Server Using HTTP Stream Transport

    # calc-server.py
    from mcp.server.fastmcp import FastMCP

    # Initialize FastMCP server
    mcp = FastMCP("calculator-mcp-server")

    @mcp.tool()
    def add_numbers(a: int, b: int) -> int:
        """Add two numbers and returns the sum"""
        return a + b

    @mcp.tool()
    def multiply_numbers(a: int, b: int) -> int:
        """Multiply two numbers together and returns the product"""
        return a * b

    if __name__ == "__main__":
        mcp.run(transport="streamable-http")

Here I run FastMCP with transport="streamable-http".

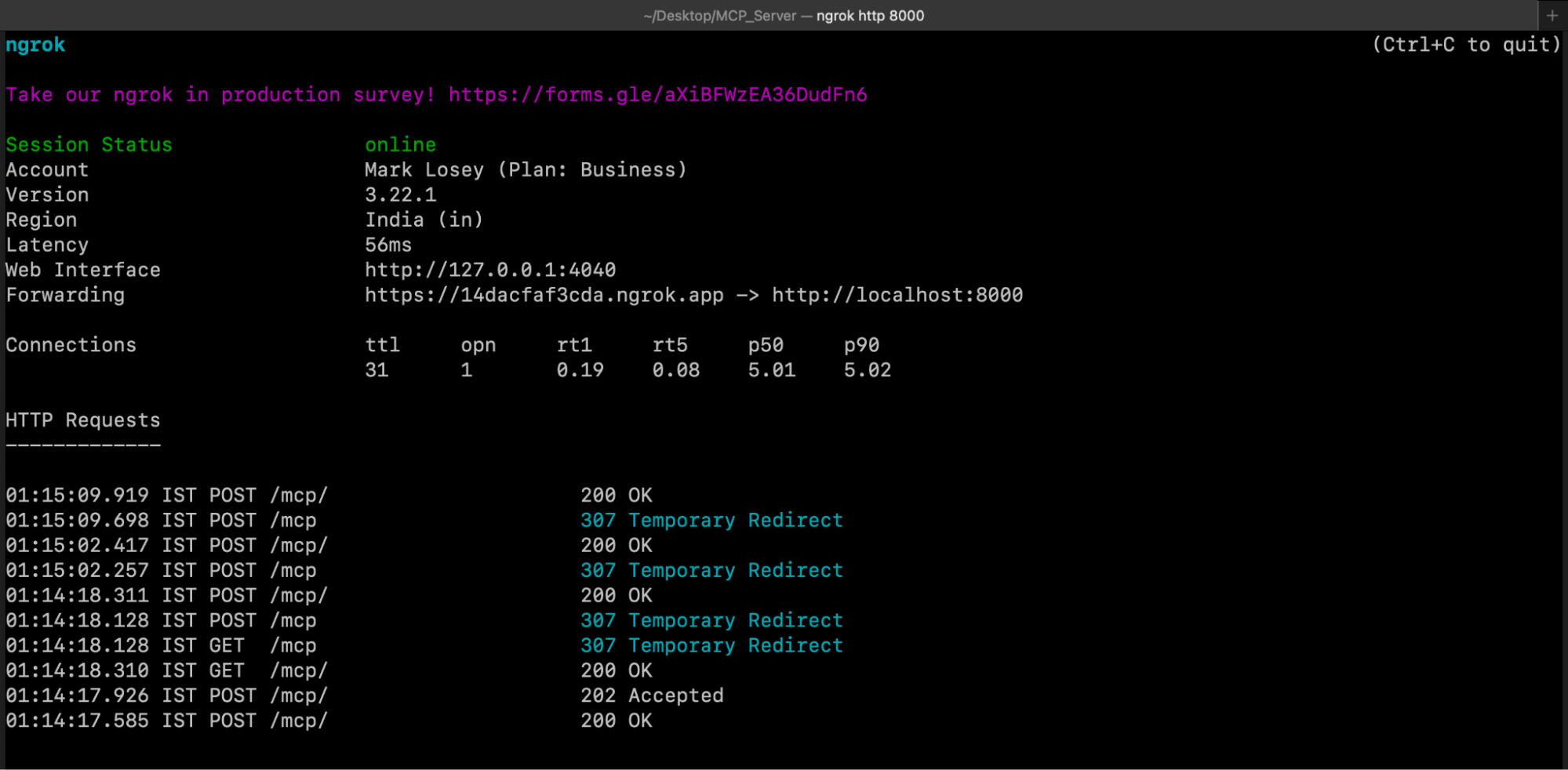

That’s it. Now, by running ngrok http 8000 in the terminal, I forward localhost:8000 to a public endpoint that can be shared, and I set that endpoint in my mcp.json file. Replace the URL below with your own ngrok URL and keep the /mcp path at the end.

    {
      "mcpServers": {
        "calc-server": {
          "url": "https://14dxxxxf3cda.ngrok.app/mcp"
        }
      }
    }

Run the calc-server.py file using python calc-server.py.

Once the server is running in the terminal, you can ask the IDE any question that needs your tools, which in my case are addition and multiplication.

So here I ran my MCP server using the HTTP Stream Transport. If I share my ngrok endpoint URL, any user can place it in their mcp.json and use my server without having it on their local machine.

These were the built-in transports provided by MCP: stdio, the deprecated SSE, and the HTTP Stream Transport.

MCP also allows custom transports for cases these do not cover.

MCP is setting a new standard in how LLMs connect to tools, APIs, and real-world data.

Now it’s your turn: try creating your own MCP server and power up your AI workflows! ⚡

About the Author

I am Arpit Kabra, a Solution Engineer at Hashtrust Technologies, currently working in the exciting and fast-evolving GenAI space. I’m passionate about building real-world solutions that combine AI agents, LLMs, and practical tools to simplify complex tasks for developers and users.

About Hashtrust

Hashtrust is a product engineering and innovation company helping startups and enterprises build intelligent solutions using AI agents, GenAI, and multi-agent systems. From rapid POCs to production-grade platforms, Hashtrust empowers teams to turn ideas into impactful, scalable tech products.

Explore more at hashtrust or reach out at support@hashtrust.in.